Is Continuous Optimization of an Asset Possible?

Unplanned downtime remains one of the dominant costs in manufacturing operations, despite advances in predictive and condition-based maintenance technologies. Proactive maintenance aims to catch symptoms of failure early, infer probable causes from those symptoms, and act before the failure occurs, yet it has achieved only limited gains over the previous generation of predictive maintenance approaches.

This is mainly because the asset in question is treated as a “black box.” Without knowledge of the asset’s internal state, and of the state transitions that occur between its sub-processes during operation, it is not possible to infer exhaustively the real cause of a failure or the potential for one. In the past, even when predictive maintenance treated a symptom and a failure was avoided, the underlying cause was left unaddressed.

The reality is that an asset fails unexpectedly when some failure-inducing condition in its past operational behavior has gone undetected. That’s why sub-optimality must be detected, not only to avoid unexpected failures but also to achieve superior performance. This is where the idea of continuous asset optimization enters the picture. Industry 4.0 technologies can now generate meaningful, contextual information on asset performance in real time, which makes it reasonable to aim for dynamically maintaining asset optimality.

Traditional Big Data analytics applies deep learning to strategic optimization, supporting decisions whose impact plays out over a month, six months, or a year. This requires a large volume of historical data to uncover hidden patterns in asset performance. Continuous asset optimization differs in that it targets the relatively immediate future, such as the remainder of a shift, the next shift, or the next day, and it does so without needing a significant amount of historical data, which makes it tactical and operational rather than strategic. This real-time approach also ensures that the behavior of each component or sub-process of every critical asset is understood within its wider operational context. By modelling the interactions between the behaviors of a process’s or asset’s components from first-principles physics, future behavior can be predicted and optimized using artificial intelligence techniques, and operating costs can be significantly reduced.
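The idea that near-term behavior can be predicted from a first-principles model, without a large historical data set, can be illustrated with a deliberately small example. The Python sketch below assumes a lumped thermal model of a single motor winding (the class `MotorThermalModel` and all of its parameter values are hypothetical), predicts the winding temperature over the remainder of a shift, and uses a plain bisection search, standing in for the AI techniques mentioned above, to find the highest constant load that keeps the winding below a temperature limit. It models only one component; a real twin would couple several such sub-process models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MotorThermalModel:
    """Hypothetical lumped (first-order) thermal model of a motor winding.

    Heat balance: C * dT/dt = P_loss(load) - (T - T_amb) / R
    Every parameter value below is an illustrative assumption, not plant data.
    """
    heat_capacity_j_per_k: float = 20_000.0   # C
    thermal_resistance_k_per_w: float = 0.05  # R
    ambient_c: float = 30.0                   # T_amb
    fixed_loss_w: float = 200.0               # iron and friction losses, roughly load-independent
    rated_copper_loss_w: float = 1_500.0      # copper losses at 100 % load

    def loss_w(self, load: float) -> float:
        # Copper losses scale with current squared, approximated here as load squared.
        return self.fixed_loss_w + self.rated_copper_loss_w * load ** 2

    def predict_peak_temp(self, start_temp_c: float, load: float,
                          horizon_s: float, dt_s: float = 10.0) -> float:
        """Integrate the heat balance with explicit Euler steps; return the peak temperature."""
        temp = start_temp_c
        peak = temp
        for _ in range(int(horizon_s / dt_s)):
            heat_out_w = (temp - self.ambient_c) / self.thermal_resistance_k_per_w
            temp += (self.loss_w(load) - heat_out_w) / self.heat_capacity_j_per_k * dt_s
            peak = max(peak, temp)
        return peak


def max_safe_load(model: MotorThermalModel, start_temp_c: float,
                  horizon_s: float, limit_c: float, tol: float = 1e-4) -> Optional[float]:
    """Largest constant load in [0, 1] whose predicted peak stays at or below limit_c.

    Bisection is valid here because the predicted peak never decreases as load increases.
    Returns None when even zero load would exceed the limit.
    """
    def safe(load: float) -> bool:
        return model.predict_peak_temp(start_temp_c, load, horizon_s) <= limit_c

    if not safe(0.0):
        return None
    if safe(1.0):
        return 1.0
    lo, hi = 0.0, 1.0  # invariant: safe(lo) is True, safe(hi) is False
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if safe(mid):
            lo = mid
        else:
            hi = mid
    return lo


if __name__ == "__main__":
    model = MotorThermalModel()
    # Winding is at 60 °C with four hours left in the shift; keep it at or below 100 °C.
    load = max_safe_load(model, start_temp_c=60.0, horizon_s=4 * 3600, limit_c=100.0)
    print(f"Maximum constant load for the rest of the shift: {load:.1%}")
```

With these illustrative parameters the steady-state winding temperature is 40 + 75·L² °C for load fraction L, so the search settles at about L ≈ 0.89, where that expression reaches 100 °C. The point of the design is that the model's parameters come from physics and nameplate data rather than from months of history.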

Such continuous, practical asset optimality can be achieved in a standard and systematic manner through digital twins. Digital twins have been around for almost a decade, but their applications have evolved since they were first defined.

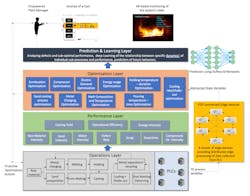

The Digital Twin framework shown comprises four layers: operations, performance, optimization, and prediction. The operations layer connects to the assets in the plant and collects real-time data from the assets and their sub-processes. The performance layer computes asset performance from the collected data. The optimization layer feeds the data from the operations layer, together with the key performance indicators computed in the performance layer, into models of specific sub-processes of the assets. The individual optimizations computed by these models are then passed to the prediction layer, which reconciles the sub-process optimizations into an overall asset or process optimization. Communication between the layers happens opportunistically and dynamically, so that the assets are continuously optimized. This is how a significant part of the digital twin can be implemented with a minimal network infrastructure, edge computing, IoT hardware, and intelligent firmware and application software, keeping overall implementation costs affordable.
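To make the data flow between the four layers concrete, the following Python sketch runs them as a simple synchronous pipeline for a hypothetical asset with two sub-processes, a motor and a pump. Everything in it is an assumption for illustration: the `Reading` schema, the efficiency KPI, the derating rules inside `motor_model` and `pump_model`, and the reconciliation rule in `prediction_layer` (the asset runs no faster than its most constrained sub-process). It does not model the opportunistic, event-driven communication between layers, nor any edge deployment.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Reading:
    """One real-time sample from a sub-process (hypothetical schema)."""
    sub_process: str
    input_kw: float
    output_kw: float
    temp_c: float


@dataclass
class Recommendation:
    """A sub-process model's proposal for the asset's throughput."""
    sub_process: str
    max_throughput: float  # fraction of rated asset throughput this sub-process supports
    reason: str


# Operations layer: keep the most recent sample for each sub-process.
def operations_layer(samples: List[Reading]) -> Dict[str, Reading]:
    latest: Dict[str, Reading] = {}
    for sample in samples:  # assumes samples arrive in time order
        latest[sample.sub_process] = sample
    return latest


# Performance layer: compute one KPI (energy efficiency) per sub-process.
def performance_layer(latest: Dict[str, Reading]) -> Dict[str, float]:
    return {name: r.output_kw / r.input_kw
            for name, r in latest.items() if r.input_kw > 0}


# Optimization layer: each sub-process model proposes its own throughput limit.
SubProcessModel = Callable[[Reading, float], Recommendation]


def motor_model(r: Reading, efficiency: float) -> Recommendation:
    # Illustrative rule: full load up to 80 °C, derated linearly to zero at 100 °C.
    limit = max(0.0, min(1.0, (100.0 - r.temp_c) / 20.0))
    return Recommendation(r.sub_process, limit, f"winding at {r.temp_c:.0f} °C")


def pump_model(r: Reading, efficiency: float) -> Recommendation:
    # Illustrative rule: derate in proportion to how far efficiency falls below 80 %.
    limit = max(0.0, min(1.0, efficiency / 0.8))
    return Recommendation(r.sub_process, limit, f"efficiency {efficiency:.0%}")


def optimization_layer(latest: Dict[str, Reading], kpis: Dict[str, float],
                       models: Dict[str, SubProcessModel]) -> List[Recommendation]:
    return [model(latest[name], kpis[name])
            for name, model in models.items() if name in latest and name in kpis]


# Prediction layer: reconcile sub-process proposals into one asset-level setpoint.
# Here the asset can run no faster than its most constrained sub-process.
def prediction_layer(recs: List[Recommendation]) -> Optional[Recommendation]:
    return min(recs, key=lambda rec: rec.max_throughput, default=None)


def run_twin(samples: List[Reading],
             models: Dict[str, SubProcessModel]) -> Optional[Recommendation]:
    latest = operations_layer(samples)
    kpis = performance_layer(latest)
    recs = optimization_layer(latest, kpis, models)
    return prediction_layer(recs)


if __name__ == "__main__":
    samples = [
        Reading("motor", input_kw=110.0, output_kw=99.0, temp_c=70.0),
        Reading("pump", input_kw=100.0, output_kw=72.0, temp_c=45.0),
        Reading("motor", input_kw=112.0, output_kw=100.0, temp_c=90.0),
    ]
    decision = run_twin(samples, {"motor": motor_model, "pump": pump_model})
    if decision is not None:
        print(f"Asset setpoint {decision.max_throughput:.0%}, "
              f"limited by {decision.sub_process} ({decision.reason})")
```

With the sample data the motor is the bottleneck: its latest reading of 90 °C limits the asset to 50 % of rated throughput, while the pump at 72 % efficiency would allow about 90 %. Swapping in a different reconciliation rule, for example a cost-weighted optimization across sub-processes, only requires changing `prediction_layer`.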

About the Author

Dr. Ananth Seshan

Member, International Board of Directors, MESA International
