Welcome to the first installment in a new series of content from Automation World. This Peer-to-Peer FAQ series will focus on explaining the most common and trending technologies in the world of industrial automation.

This first installment focuses on sensors. Because industrial sensors are so numerous, we are focusing on machine vision sensors, smart instrument sensors, and the application of artificial intelligence (AI) to industrial sensor data.

Each installment in this Peer-to-Peer FAQ series will highlight succinct yet detailed explanations of each technology, followed by insights from end users and integrators about their selection, implementation, and use.

Machine vision refers to a combination of imaging, processing, and communication components integrated into a system for inspecting and analyzing objects. Required components of the image-capture system include lighting to illuminate objects, a camera for capturing images, and a CMOS-compatible vision sensor, which converts light captured through the camera lens into an electrical signal that can be processed as digital image output. Once an image has been digitized, it is sent to an industrial computer or other image-processing device equipped with software capable of using various algorithms and other methods to identify patterns in the objects being viewed.

Once a machine vision system is in place, an object sensor is used to detect when a part or component is present. From there, the sensor triggers the light source and camera, which captures an image of the object. The vision sensor then translates the image taken by the camera into a digital output. Finally, the digital file is saved on a computer so that it can be analyzed by the system’s software, which compares the file against a set of predetermined criteria to identify some property of the object, such as what type of product it is, or if it has any defects.
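
The sequence described above (detect, trigger, capture, digitize, compare) can be sketched in a few lines of Python. This is a minimal illustration, not a vendor implementation: the `inspect_part` function, the `fake_camera` stand-in, and the brightness criterion are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Callable, List

Image = List[List[int]]  # grayscale image as rows of 0-255 pixel values


@dataclass
class InspectionResult:
    part_present: bool
    passed: bool
    mean_brightness: float


def inspect_part(
    part_present: bool,
    capture: Callable[[], Image],
    min_brightness: float,
    max_brightness: float,
) -> InspectionResult:
    """Run one detect -> trigger -> capture -> compare cycle.

    `capture` stands in for the light source, camera, and vision sensor
    together (an assumption): it returns an already-digitized image.
    """
    if not part_present:
        # The object sensor saw nothing, so the camera is never triggered.
        return InspectionResult(False, False, 0.0)

    image = capture()
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)

    # Compare the measured property against the predetermined criteria.
    passed = min_brightness <= mean <= max_brightness
    return InspectionResult(True, passed, mean)


def fake_camera() -> Image:
    # Hypothetical 2x3 image used only for demonstration.
    return [[100, 110, 120], [130, 140, 150]]


result = inspect_part(True, fake_camera, min_brightness=90, max_brightness=160)
print(result)
```

In a real system the comparison step would be far richer than a mean-brightness check, but the control flow stays the same: no part, no trigger; part present, capture and evaluate.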

Various types of vision sensors can be used depending upon the machine vision application. These include:

- Contour sensors. By inspecting the shape and contour of a passing item in comparison to other items on an assembly line, these sensors can be used to verify the structure, orientation, position, or completeness of an object. Often, contour sensors are used for tasks such as verifying the proper alignment of parts.

- Pixel counter sensors. Used to measure objects by counting the individual pixels of identical gray-scale values within an image, pixel counter sensors are able to determine the shape, size, and shade of items. Possible applications include identifying missing threads in metal parts, verifying the correct shape of injection molded products, and counting the number of holes within a rotor.

- Code readers. These sensors recognize barcodes and 2D codes, which makes them suitable for reading product packaging labels and sorting products by serial number for inventory management purposes.

- 3D sensors. Unlike other vision sensors, 3D sensors are capable of analyzing the surface of an object and its depth. For instance, a 3D sensor can determine if a crate has a full quota of bottles within it or if the proper number of items has been stored on a pallet.
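
To make the pixel counter idea from the list above concrete, here is a rough sketch of how counting pixels by gray-scale value can verify a feature such as a hole. It assumes the sensor counts pixels inside a gray-value band rather than one exact value; the function names, the 0-50 "dark" band, and the sample image are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 pixel values


def count_pixels_in_range(image: Image, low: int, high: int) -> int:
    """Count pixels whose gray value lies in the inclusive band [low, high]."""
    return sum(1 for row in image for p in row if low <= p <= high)


def hole_area_ok(image: Image, expected: int, tolerance: int) -> bool:
    """Treat dark pixels (gray value 0-50, an assumed band) as 'hole'
    and check that their count is within tolerance of the expected area."""
    dark = count_pixels_in_range(image, 0, 50)
    return abs(dark - expected) <= tolerance


# Hypothetical 4x4 image: a bright part with a dark 2x2 hole in the middle.
sample = [
    [200, 200, 200, 200],
    [200, 10, 20, 200],
    [200, 15, 25, 200],
    [200, 200, 200, 200],
]

print(count_pixels_in_range(sample, 0, 50))
print(hole_area_ok(sample, expected=4, tolerance=1))
```

A missing or partially blocked hole would change the dark-pixel count, which is how such a sensor could flag the part without any shape analysis.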

Integrators responding to an Automation World survey for this FAQ installment listed the following errors as the most common ones they see with machine vision implementations:

- Incorrect mounting of the camera/sensor for the given setup;

- Not compensating or correcting for moisture on the lens;

- Improper center distance from the sensor;

- Lack of precision in 3D point selection; and

- Lack of lighting compensation for proper detection for metallic or shiny objects.

As part of Automation World’s research on this subject, we asked system integrators to share their top recommendations for the selection and implementation of machine vision. When it comes to the selection process, eight key aspects were clear:

- Evaluate the sensor type used in the camera—CMOS or CCD. CMOS tends to be preferred over CCD because of its flexibility and greater range of options.

- Determine the need for color. If color is not necessary to the machine vision application, go with a monochrome version or mode.

- Consider the machine vision system’s compatibility with existing systems and other installed technologies with which the machine vision system will operate.

- Evaluate the choice of PC-based or embedded vision options. Embedded vision systems are often easier to integrate into existing systems, as they tend to be smaller. Because embedded vision systems tend to be housed in one device, they have few moving parts and require less maintenance.

- Don’t overlook granular details such as sensing distance and accuracy. Such factors can have dramatic impacts on the applications for which the machine vision technology is initially installed and its adaptability to process changes.

- Ensure Industrial Internet of Things (IIoT) connectivity. This includes available Ethernet ports as well as applicability of various industrial communications protocols.

- Check environmental operating conditions. Verify that the machine vision systems you’re investigating are guaranteed to function in your operating environment.

- Reference the VDI/VDE/VDMA 2632-2 standard for machine vision applications. This standard provides a guideline for machine vision technology suppliers and users related to setting up a machine vision system for specific tasks.

Compared with machine vision selection recommendations from integrators, which tend to focus on core features and functionality, reader recommendations spotlight application tips and advice. Most commonly, readers focused on ease of use and solid tech support from the vendor. These two factors were cited most often, as vision system accuracy and correct configuration are critical to successful application of the technology.

Beyond these recommendations, readers offered 13 other pieces of application advice:

- Be very clear about the current process requirements, as they help narrow down what to expect from the vision system.

- Develop an in-house vision team to help in the selection and programming of the vision system(s). Similarly, other respondents recommended having a clear internal owner who’s in charge of support and changes before going into production with the vision system.

- Realize the bigger picture—with vision systems you can go beyond rejecting defective parts. The data you collect about defects gives you the opportunity to identify and fix performance issues on your production line.

- The production process should allow ample time for inspection by the vision system best suited to your application.

- Make sure the camera mounting does not interfere with operator interaction with the machine, such as removing failed parts, fixing jams, or performing maintenance.

- Look for a machine vision system with an intuitive and easy to understand operator interface.

- Make sure the vision system works with your room lighting and be prepared to add lighting as needed.

- Find a good vision system integrator—successful setup of machine vision often requires a good integrator.

- The vision system should have data trending capabilities and be able to provide real time data to the process equipment to allow for making changes on-the-fly.

- Record and store pass/fail data for continuous improvement.

- Spend the money for the best but be mindful that price doesn't necessarily equal value. One respondent noted: “Shiny metal pieces can be difficult to detect” regardless of which system you choose.

- Explore machine vision start-ups to access the latest capabilities. “This space is evolving quickly with lower costs alternatives,” noted one respondent.

- Take the time to do a proof of concept with two to three vendors to compare results and drive competition among suppliers.
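
Two of the tips above, recording pass/fail data and trending it in real time, lend themselves to a small illustration. The sketch below keeps a rolling window of inspection results and reports the recent reject rate; the `PassFailTrend` class and the window size are hypothetical, and a production system would persist results to a database rather than hold them in memory.

```python
from collections import deque


class PassFailTrend:
    """Keep the most recent inspection results and report the reject rate."""

    def __init__(self, window: int) -> None:
        # A deque with maxlen drops the oldest result automatically.
        self.results = deque(maxlen=window)

    def record(self, passed: bool) -> None:
        self.results.append(passed)

    def reject_rate(self) -> float:
        """Fraction of failed parts within the current window."""
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)


trend = PassFailTrend(window=4)
for outcome in (True, True, False, True):
    trend.record(outcome)
print(trend.reject_rate())

# A fifth result pushes the oldest one out of the window.
trend.record(False)
print(trend.reject_rate())
```

A rising reject rate over the window is the kind of signal that could be passed to process equipment or reviewed later for continuous improvement.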

Smart instrument sensors

Smart instruments are typically defined as instruments that have been imbued with measurement and diagnostic capabilities beyond their primary function. For instance, a device such as a Coriolis flow meter would qualify as a smart instrument because it is capable of measuring mass, volume, and density simultaneously. To do this, smart instruments rely on intelligent sensors capable of interfacing with onboard microprocessors. In addition to the sensor, a transmitter gives the instrument the ability to communicate data over a network.

Ultimately, the intelligent sensors that drive smart instruments record the same process variables, such as level, temperature, pressure, and proximity, as analog base sensors. However, a base sensor is calibrated to send a voltage signal directly corresponding to one of these process values to an external control system, which then interprets and acts on the information. By contrast, an intelligent sensor uses its own onboard microprocessor to convert the data into a usable, digital format before any communication to external devices occurs. In other words, whereas a base sensor’s output is raw and must be converted into a usable format by an external device, an intelligent sensor produces output that is ready to use. Similarly, because the intelligent sensor does not need to pass analog voltage signals to an external control system, it can process, record, and transmit multiple variables simultaneously.
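
The difference between raw and ready-to-use output can be illustrated with a short sketch. It assumes simple linear scaling from a 0-10 V signal to engineering units, which is one common approach but not the only one; the `Reading` type, the function names, and the pressure and temperature ranges are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    variable: str
    value: float
    unit: str


def scale_linear(raw_volts: float, v_min: float, v_max: float,
                 eng_min: float, eng_max: float) -> float:
    """Map a raw voltage onto an engineering range by linear interpolation."""
    fraction = (raw_volts - v_min) / (v_max - v_min)
    return eng_min + fraction * (eng_max - eng_min)


def intelligent_sensor_output(pressure_volts: float,
                              temp_volts: float) -> List[Reading]:
    """Convert raw signals onboard and report several variables at once.

    A base sensor would instead pass the raw voltages to an external
    controller, which would have to perform this conversion itself.
    The ranges below are assumed for illustration only.
    """
    return [
        Reading("pressure",
                scale_linear(pressure_volts, 0.0, 10.0, 0.0, 16.0), "bar"),
        Reading("temperature",
                scale_linear(temp_volts, 0.0, 10.0, -50.0, 150.0), "degC"),
    ]


readings = intelligent_sensor_output(pressure_volts=5.0, temp_volts=2.5)
for r in readings:
    print(f"{r.variable}: {r.value:.1f} {r.unit}")
```

Because the conversion happens on the device, whatever receives these readings gets values in engineering units with no further interpretation required.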

Moreover, because smart instruments can process data natively, they can also bypass lower-level control systems to transmit information directly over the internet or a private digital network, saving space, power, and computational resources. This also allows operators to access maintenance, calibration, and commissioning data via mobile devices such as phones or tablets, rather than having to visit fixed workstations.

Finally, smart instruments are capable of engaging in self-diagnostics and can report potential calibration issues to operators before they occur. By assessing their own outputs against traceable and well-established calibration standards, smart instruments can self-confirm the quality of the data they produce. Like their ability to record and transmit multiple process variables simultaneously, this would not be possible without intelligent sensors capable of interfacing with an embedded microprocessor.
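
As a rough illustration of this kind of self-check, the sketch below compares a measurement of a known reference against its expected value and raises a warning before the error actually exceeds tolerance. The `calibration_status` function, the 80% warning band, and the numeric values are assumptions made for the example, not a description of any particular instrument.

```python
def calibration_status(measured: float, reference: float,
                       tolerance: float, warn_fraction: float = 0.8) -> str:
    """Classify a reading taken against a known reference standard.

    Returns "ok", "warning" (error is approaching the tolerance limit),
    or "out_of_tolerance". The warning band is what lets the instrument
    flag a potential calibration issue before it becomes a real one.
    """
    error = abs(measured - reference)
    if error > tolerance:
        return "out_of_tolerance"
    if error > warn_fraction * tolerance:
        return "warning"
    return "ok"


# Hypothetical checks against a 100.0-unit reference with +/-0.5 tolerance.
for value in (100.2, 100.45, 100.6):
    print(value, calibration_status(value, reference=100.0, tolerance=0.5))
```

An instrument running a check like this on a schedule could report the "warning" state to operators over the network, prompting recalibration during planned downtime.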

Usage recommendations

While smart instruments may be “smart” in terms of the additional insights they can provide about the processes they’re monitoring, they still need handholding when it comes to successful implementation and use.

Integrators responding to our survey noted the following as their top seven pieces of advice when it comes to selecting smart instruments with advanced sensing technology:

- Be sure to review the manufacturer's specifications to ensure the instrument can deliver the results you’re seeking.

- Verify the instrument’s accuracy and repeatability.

- Carefully review climatic conditions requirements.

- Make sure the instrument has the communication options you seek now and, potentially, in the future; open communication options are often preferred.

- Ensure that the instruments are compatible with existing systems in your facility.

- Choose an integrator with proven experience working with the sensors and instruments you select.

- Expect to spend more money than planned when bringing your first smart instrument systems into use.

Application of AI to sensor data

Artificial intelligence can play a substantial role in making use of the data collected by sensors. Machine vision is a useful example: the images captured cannot be analyzed without a software algorithm, but that analysis often relies on fixed rules determined by a human programmer and therefore does not qualify as AI-driven. In these cases, data is extracted from object images in the form of various criteria, such as measurement or object type, and then compared against pre-established target values to make a decision pertaining to the object.
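
A fixed-rule inspection of this kind might look something like the following sketch. The feature names, target ranges, and `evaluate` function are hypothetical; the point is only that every rule is written down in advance by a person.

```python
from typing import Dict, List, Tuple

# Fixed rules chosen by a human programmer: feature -> (minimum, maximum).
# These features and limits are invented for illustration.
RULES: Dict[str, Tuple[float, float]] = {
    "width_mm": (49.5, 50.5),
    "hole_count": (4, 4),
}


def evaluate(features: Dict[str, float]) -> Tuple[bool, List[str]]:
    """Compare extracted features against the pre-established targets.

    Returns (passed, names_of_failed_rules). A feature that is missing
    from the input is treated as a failure, because NaN never satisfies
    a range comparison.
    """
    failures = [
        name
        for name, (low, high) in RULES.items()
        if not low <= features.get(name, float("nan")) <= high
    ]
    return (not failures, failures)


print(evaluate({"width_mm": 50.1, "hole_count": 4}))
print(evaluate({"width_mm": 51.0, "hole_count": 4}))
```

Changing what counts as a good part means editing `RULES` by hand, which is the limitation that learned approaches aim to address.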

In contrast, AI-driven machine vision quality inspection relies on deep learning technology, which employs a deep neural net (DNN) inspired by real biological processes to ingest and process large amounts of visual data. Through the use of a DNN, a machine vision system can autonomously create rules that determine the combination of properties that differentiate quality products from those with defects. This not only allows the system to learn how to detect more subtle and qualitative imperfections, but may also improve the flexibility of quality inspection systems by allowing reconfiguration to proceed autonomously. In essence, an AI-driven machine vision system could generate its own set of rules for determining whether a new product should pass or fail inspection merely by viewing a set of images of both good-quality and defective items.

Robotic pick-and-place operations on a production line can similarly benefit from AI-driven machine vision. Often, robots performing these operations are trained using teach pendants or hand-guiding procedures that require operators to manually guide the robot arms through each step in a standardized operation. If a work-cell configuration or the items to be handled change, the robots must be manually reprogrammed. However, when an AI-driven machine vision system is used for guidance instead, robots can automatically calibrate themselves. This functionality is particularly useful in environments with rapid changeovers or a high mix of different products, which mandate frequent reconfiguration.
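
The idea of deriving a pass/fail rule from labeled examples, rather than writing it by hand, can be shown with a deliberately tiny sketch. Note that this is not a deep neural network: it uses a nearest-centroid classifier on two made-up numeric features as a much simpler stand-in, purely to show the "learn from good and defective samples" workflow. The class name, features, and data are all hypothetical.

```python
from typing import List, Sequence

Vector = Sequence[float]


def centroid(samples: List[Vector]) -> List[float]:
    """Average each feature across the samples."""
    n = len(samples)
    return [sum(column) / n for column in zip(*samples)]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


class LearnedInspector:
    """Nearest-centroid classifier: a simple stand-in for a DNN."""

    def fit(self, good: List[Vector], defective: List[Vector]) -> "LearnedInspector":
        # The "rule" is learned from examples instead of being hand-written.
        self.good_center = centroid(good)
        self.bad_center = centroid(defective)
        return self

    def passes(self, features: Vector) -> bool:
        # A part passes if it looks more like the good examples.
        return (squared_distance(features, self.good_center)
                <= squared_distance(features, self.bad_center))


# Hypothetical features per image: [scratch_score, edge_roughness].
good_examples = [[0.10, 0.20], [0.20, 0.10], [0.15, 0.15]]
defective_examples = [[0.80, 0.70], [0.90, 0.90]]

inspector = LearnedInspector().fit(good_examples, defective_examples)
print(inspector.passes([0.20, 0.20]))
print(inspector.passes([0.70, 0.90]))
```

Retraining for a new product only requires a new set of labeled examples, which mirrors the reconfiguration benefit described above, although a real deep learning system works on raw images and learns far richer features.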

AI application trends

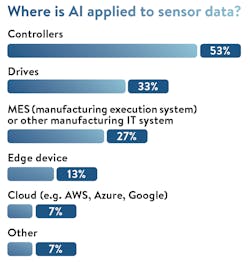

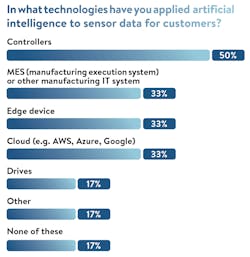

Among the more interesting insights derived from our research for this article are the differences in the types of devices or systems using AI on sensor data cited by end users and integrators. For integrators, controllers lead the AI-enabled systems being applied to sensor data (50%), followed by manufacturing execution systems, edge devices, and cloud applications (tied at 33% each). For end users, the top three AI-enabled technologies are controllers (53%), drives (33%), and manufacturing execution systems (27%).

End users noted the following specific types of applications in which they are using AI:

- Component and product inspection;

- Overall asset health;

- Part and product positions on conveyors;

- Quality inspections; and

- Torque, temperature, and vibration analyses.

Looking beyond general applications of AI to sensor data, integrators also had several tips about specific application types that can be especially useful. These tips include:

- Choose AI technologies that can be applied to multiple processes.

- Start small with a couple of your most critical/valuable assets; don't try to boil the ocean.

- Leverage existing data with AI before adding more sensors, as you may already have enough data to make a positive impact.

- Take advantage of new cloud-based AI/ML (machine learning) services that can simplify and/or accelerate model development and deployment.

- Explore AI technology startups focused on industrial data.

- Seek out technologies that provide for flexibility in changing AI algorithms; this can be particularly useful in applications requiring micron-level accuracy.

- Be generous with your database sizes.

Most importantly, integrators advised manufacturers to “get started ASAP” in applying AI to sensor data. With more than a quarter of the market already using it, those who lag in AI adoption could find themselves at a significant disadvantage from an operational uptime viewpoint as well as in longer-term strategic decision-making capabilities.