Though the use of artificial intelligence (AI) is increasing across the industrial automation spectrum, many in industry are still unclear about its applications and benefits. This is not surprising, considering that most end users will never knowingly interact with an AI application directly. AI tends to work behind the scenes, processing inputs and actions to streamline the functions of the systems that employ it.

Even though you may not need to learn how to interact with AI, having a basic understanding of how it works will likely be as important as understanding how to set up a Wi-Fi network in your home. We’ve all learned so much about Wi-Fi networks because of how much we depend on them. The same will likely be said of AI in the near future.

A few months ago, Automation World connected with Anatoli Gorchet, co-founder and chief technology officer at Neurala (a supplier of AI vision software), to better understand how AI works in industrial inspection processes using machine vision. Following the publication of Gorchet’s insights, we learned about another AI term we were not familiar with: explainability.

According to Max Versace, CEO and co-founder of Neurala, explainability is “critical for debugging. No matter how explainable AI turns out to be, nobody will ever deploy a solution that makes tons of mistakes. You need to be able to see when AI fails and why it fails. And explainable AI techniques can help you determine whether the AI is focusing on the wrong things.”

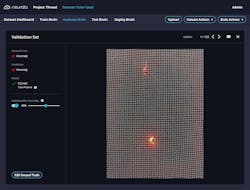

As an example, Versace said to consider a deep learning network deployed on industrial cameras to provide quality assurance in a manufacturing setting. “This AI could be fooled into classifying some products as normal when, in fact, they are defective. Without knowing which part of the image the AI system is relying on to decide ‘good product’ vs. ‘bad product,’ a machine operator might unintentionally bias the system,” he said. “If they consistently show the ‘good product’ on a red background and the ‘bad product’ on a yellow one, the AI will classify anything on a yellow background as a ‘bad product.’ However, an explainable AI system would immediately communicate to the operator that it is using the yellow background as the feature most indicative of a defect. The operator could use this intel to adjust the settings so both objects appear on a similar background. This results in better AI and prevention of a possibly disastrous AI deployment.”
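To make the background example concrete, here is a minimal sketch in Python. It is not Neurala's method. It reduces each "image" to two hypothetical numbers (a yellow-background flag and a noisy scratch score), trains a small logistic regression on the biased data, and then uses permutation importance, one common explainability technique, to estimate which input the model leans on. The function names, the feature definitions, and the data are all assumptions made for illustration.

```python
import math
import random

def make_data(n, rng, biased):
    """Return (rows, labels); each row is [yellow_background, scratch_score]."""
    rows, labels = [], []
    for _ in range(n):
        defective = rng.randint(0, 1)
        # A genuine but noisy defect cue: defective parts tend to score higher.
        scratch = defective + rng.gauss(0.0, 1.0)
        # Biased set: bad parts are always shown on the yellow background.
        background = defective if biased else rng.randint(0, 1)
        rows.append([float(background), scratch])
        labels.append(defective)
    return rows, labels

def train_logistic(rows, labels, steps=300, lr=0.5):
    """Batch gradient-descent logistic regression; returns (weights, bias)."""
    w, b = [0.0, 0.0], 0.0
    n = len(rows)
    for _ in range(steps):
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in zip(rows, labels):
            p = 1.0 / (1.0 + math.exp(-(w[0] * x[0] + w[1] * x[1] + b)))
            err = p - y
            grad_w[0] += err * x[0]
            grad_w[1] += err * x[1]
            grad_b += err
        w = [w[0] - lr * grad_w[0] / n, w[1] - lr * grad_w[1] / n]
        b -= lr * grad_b / n
    return w, b

def accuracy(model, rows, labels):
    """Fraction of rows where the predicted class matches the label."""
    w, b = model
    hits = sum((w[0] * x[0] + w[1] * x[1] + b > 0) == bool(y)
               for x, y in zip(rows, labels))
    return hits / len(rows)

def permutation_importance(model, rows, labels, rng):
    """Accuracy lost when one feature column is shuffled, per feature."""
    base = accuracy(model, rows, labels)
    drops = []
    for j in range(2):
        column = [x[j] for x in rows]
        rng.shuffle(column)
        shuffled = [x[:j] + [v] + x[j + 1:] for x, v in zip(rows, column)]
        drops.append(base - accuracy(model, shuffled, labels))
    return drops

rng = random.Random(0)  # fixed seed so the sketch is repeatable
train_rows, train_labels = make_data(400, rng, biased=True)
model = train_logistic(train_rows, train_labels)
bg_drop, scratch_drop = permutation_importance(model, train_rows, train_labels, rng)
fair_rows, fair_labels = make_data(400, rng, biased=False)

print("importance of background:", round(bg_drop, 3))
print("importance of scratch score:", round(scratch_drop, 3))
print("accuracy on biased data:", accuracy(model, train_rows, train_labels))
print("accuracy on unbiased data:", accuracy(model, fair_rows, fair_labels))
```

In this toy setup, shuffling the background column should cost the model far more accuracy than shuffling the scratch score. That is the signal Versace describes an operator receiving: the model is keying on the backdrop rather than the defect. The lower accuracy on the unbiased set hints at what could go wrong after deployment if the bias were never caught.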

Beyond this kind of application, explainability also enables accountability and auditability, said Versace. It can help answer who designed the system as well as how it was built and trained.

“At the end of the day, humans are offloading key decisions to AI,” said Versace. “And when it comes to assessing trust, they take an approach similar to that of assessing whether or not to trust a human co-worker. Humans develop trust in their co-workers when at least two conditions are satisfied: their performance is fantastic and they can articulate in an intelligible way how they obtained that outcome. For AI, the same combination of precision and intelligibility will pave the way for wider adoption.”
