Earlier this year, Automation World met with the co-founders of Veo Robotics, Clara Vu and Patrick Sobalvarro, to discuss their approach to collaborative robots (cobots). In the article Making Industrial Robots Collaborative, we explained that Veo Robotics approaches cobot development by making industrial robots capable of working alongside humans, rather than developing the specialized collaborative robots that make up the bulk of the cobot market.

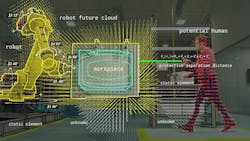

In that article, we described Veo Robotics’ FreeMove system, which uses multiple camera sensors and an algorithmic computing platform to transform industrial robots into cobots. Now, Clara Vu is offering more insights into Veo Robotics’ application of the ISO speed and separation monitoring standard to achieve this.

Cobot standards

The first thing to understand is that speed and separation monitoring (SSM) is one of four standard methods for robotic collaboration. The other three are: safety-rated monitored stop, hand guiding, and power and force limiting.

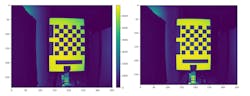

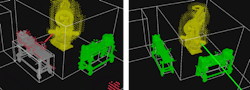

To create a safe perception system for its robots, Veo Robotics’ FreeMove system uses 3D time-of-flight sensors positioned on the periphery of the work cell to capture “rich image data of the entire space,” said Vu. “The architecture of the sensors ensures reliable data with novel dual imagers that observe the same scene so the data can be validated at a per-pixel level. With this approach, higher level algorithms will not need to perform additional validation. This 3D data can then be used to identify key elements in the work cell, including the robot, workpieces, and humans.”
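As a rough illustration of the per-pixel validation Vu describes, the sketch below cross-checks two depth images of the same scene and keeps a pixel only when both imagers agree. It is not Veo Robotics' implementation: the function name `validate_depth`, the use of `None` for a missing return, and the 5 cm tolerance are all assumptions made for the example.

```python
from typing import List, Optional

Depth = Optional[float]          # depth in meters; None means no return (assumed convention)
DepthImage = List[List[Depth]]


def validate_depth(imager_a: DepthImage,
                   imager_b: DepthImage,
                   tolerance_m: float = 0.05) -> DepthImage:
    """Cross-check two depth images of the same scene, pixel by pixel.

    A pixel is accepted only if both imagers report a depth and the two
    readings agree within tolerance_m (a hypothetical threshold).
    Rejected pixels are returned as None.
    """
    if len(imager_a) != len(imager_b):
        raise ValueError("images must have the same number of rows")

    validated: DepthImage = []
    for row_a, row_b in zip(imager_a, imager_b):
        if len(row_a) != len(row_b):
            raise ValueError("rows must have the same number of pixels")
        out_row: List[Depth] = []
        for depth_a, depth_b in zip(row_a, row_b):
            agree = (
                depth_a is not None
                and depth_b is not None
                and abs(depth_a - depth_b) <= tolerance_m
            )
            # Keep the mean of the two readings when they agree.
            out_row.append((depth_a + depth_b) / 2 if agree else None)
        validated.append(out_row)
    return validated
```

Because disagreeing pixels are rejected at this stage, code that consumes the validated image can treat every remaining depth value as already checked, which matches the point that higher-level algorithms need no additional validation.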

Safety system requirements

Because dark fabrics may not always be accurately detected by active IR sensors, the FreeMove system was designed to classify collaborative robotic workspaces as one of three states: empty, i.e., something can be seen behind it; occupied; or unknown.

“When examining volumes of space, if the sensors do not get a return from a space but cannot see through the space, that space is classified as unknown and treated as occupied until the system can determine it to be otherwise,” explained Vu.
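The three-state rule Vu describes can be sketched in a few lines. This is a minimal illustration of the logic as stated in the article, not FreeMove's actual code; the names `SpaceState`, `classify_volume`, and `must_treat_as_occupied`, and the two boolean inputs, are hypothetical.

```python
from enum import Enum


class SpaceState(Enum):
    EMPTY = "empty"
    OCCUPIED = "occupied"
    UNKNOWN = "unknown"


def classify_volume(got_return: bool, can_see_through: bool) -> SpaceState:
    """Classify one volume of space from two (assumed) sensor observations.

    got_return:      the sensors received a return from inside the volume.
    can_see_through: the sensors can see something behind the volume.
    """
    if got_return:
        return SpaceState.OCCUPIED
    if can_see_through:
        return SpaceState.EMPTY
    # No return and no line of sight through the volume, e.g. dark fabric
    # that absorbs the active IR illumination.
    return SpaceState.UNKNOWN


def must_treat_as_occupied(state: SpaceState) -> bool:
    """Unknown space is handled as occupied until shown to be otherwise."""
    return state is not SpaceState.EMPTY
```

For example, `classify_volume(got_return=False, can_see_through=False)` yields `SpaceState.UNKNOWN`, and `must_treat_as_occupied` then returns `True` for it, so the safety logic errs on the side of assuming a person could be there.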

About the Author

David Greenfield, editor in chief
