Despite the advance of automation technologies into virtually every realm of manufacturing, quality inspection remains a task commonly reserved for humans. One of the primary reasons for this is that factors such as random product placement, atypical defects, and variations in lighting can be problematic for traditional machine vision systems. This difficulty is amplified in cases where the component being inspected is part of a larger assembly or complex package.

But just as machine vision systems have their inspection-related challenges, so too do humans. Studies have shown that most operators can focus effectively on a single task for only 15 to 20 minutes at a time. Combine this with the difficulty manufacturers face in filling open assembly jobs, and relying solely on humans for all quality inspection processes becomes a clear pain point.

To help address this issue, Cognex has been working with manufacturers in the automotive, consumer packaged goods, and electronics industries to optimize its machine vision deep learning software for both final and in-line assembly verification.

John Petry, director of product marketing for vision software at Cognex, explains that, unlike traditional machine vision, deep learning machine vision tools are not programmed explicitly. “Rather than numerically defining an image feature or object within the overall assembly by shape, size, location, or other factors, deep learning machine vision tools are trained by example,” he said.

Training the neural network used in deep learning technologies requires a comprehensive set of training images that represents all potential variations in visual appearance that would occur during production. “For feature or component location as part of an assembly process, the image set should capture the various orientations, positions, and lighting variations the system will encounter once deployed,” says Petry.

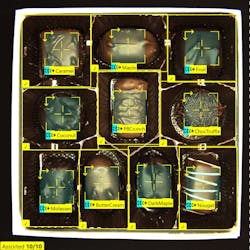

The result of Cognex’s work with industrial end users is ViDi 3.4, the company’s deep learning vision software. Explaining how ViDi 3.4 solves assembly inspection challenges, Petry notes that, unlike traditional vision systems (where multiple algorithms must be chosen, sequenced, programmed, and configured to identify and locate key features in an image), ViDi’s Blue Tool learns by analyzing images that have been graded and labeled by an experienced quality control technician. “A single ViDi Blue Tool can be trained to recognize any number of products, as well as any number of component/assembly variations,” he said. “By capturing a collection of images, it incorporates naturally occurring variation into the training, solving the challenges of both product variability and product mix during assembly verification.”

Explaining how ViDi’s Blue Tool is used, Petry said that the deep learning neural network is first trained to locate each component type; the components it finds are then verified for correct type and location. “Deep learning users can save their production images to re-train their systems to account for future manufacturing variances,” he added. “This may help limit future liability in case unknown defects affect a product that has been shipped.”

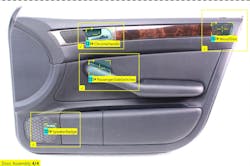

To ensure that the correct types of window switches and trim pieces are installed, ViDi 3.4 uses Layout Models. “With a Layout Model, the user draws different regions of interest in the image’s field of view to tell the system to look for a specific component, such as driver’s side window switches, in a specific location,” Petry said. “The Layout Model is also accessible and configurable through the runtime interface. No additional off-line development is required, thereby simplifying product changeovers.”
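The verification idea behind a layout of regions, where each user-drawn region is paired with the component type expected there, can be illustrated with a minimal Python sketch. This is not Cognex code and does not use the ViDi API: the `Detection` and `Region` classes, the `verify_layout` function, and the component names are all hypothetical. The sketch assumes a locate step has already reported a component type and center point for each part, and that each region of interest is an axis-aligned rectangle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical output of a locate step: one found component."""
    component_type: str
    x: float  # center x in image pixels
    y: float  # center y in image pixels


@dataclass(frozen=True)
class Region:
    """Hypothetical user-drawn region of interest (axis-aligned rectangle)
    paired with the component type expected inside it."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    expected_type: str

    def contains(self, d: Detection) -> bool:
        # A detection belongs to the region if its center falls inside it.
        return self.x_min <= d.x <= self.x_max and self.y_min <= d.y <= self.y_max


def verify_layout(detections: list[Detection], regions: list[Region]) -> dict[str, str]:
    """Return a verdict per region: 'ok', 'missing', or 'wrong type: ...'."""
    verdicts = {}
    for region in regions:
        found = [d.component_type for d in detections if region.contains(d)]
        if not found:
            # Nothing located inside this region of interest.
            verdicts[region.name] = "missing"
        elif all(t == region.expected_type for t in found):
            verdicts[region.name] = "ok"
        else:
            # Report every unexpected type found in the region.
            wrong = sorted({t for t in found if t != region.expected_type})
            verdicts[region.name] = "wrong type: " + ", ".join(wrong)
    return verdicts


# Illustrative usage with made-up coordinates and component names.
regions = [
    Region("driver_switches", 0, 0, 100, 50, "driver_window_switch"),
    Region("trim", 0, 60, 100, 120, "trim_black"),
    Region("passenger_switch", 120, 0, 200, 50, "passenger_window_switch"),
]
detections = [
    Detection("driver_window_switch", 40, 25),
    Detection("trim_chrome", 50, 90),
]
print(verify_layout(detections, regions))
# {'driver_switches': 'ok', 'trim': 'wrong type: trim_chrome', 'passenger_switch': 'missing'}
```

Iterating over regions rather than over detections is a deliberate choice in this sketch: a part that was never installed shows up as an empty region and is reported as missing, which a check driven only by what was detected would not catch.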

For more information about this deep learning software, Cognex offers a free guide, “Deep Learning Image Analysis for Assembly Verification.”